Talk on ‘Privacy-Fairness Tradeoff’ at Google DeepMind

This talk introduces individual differential privacy (DP) and shows how it can be used to reduce fairness discrepancies.

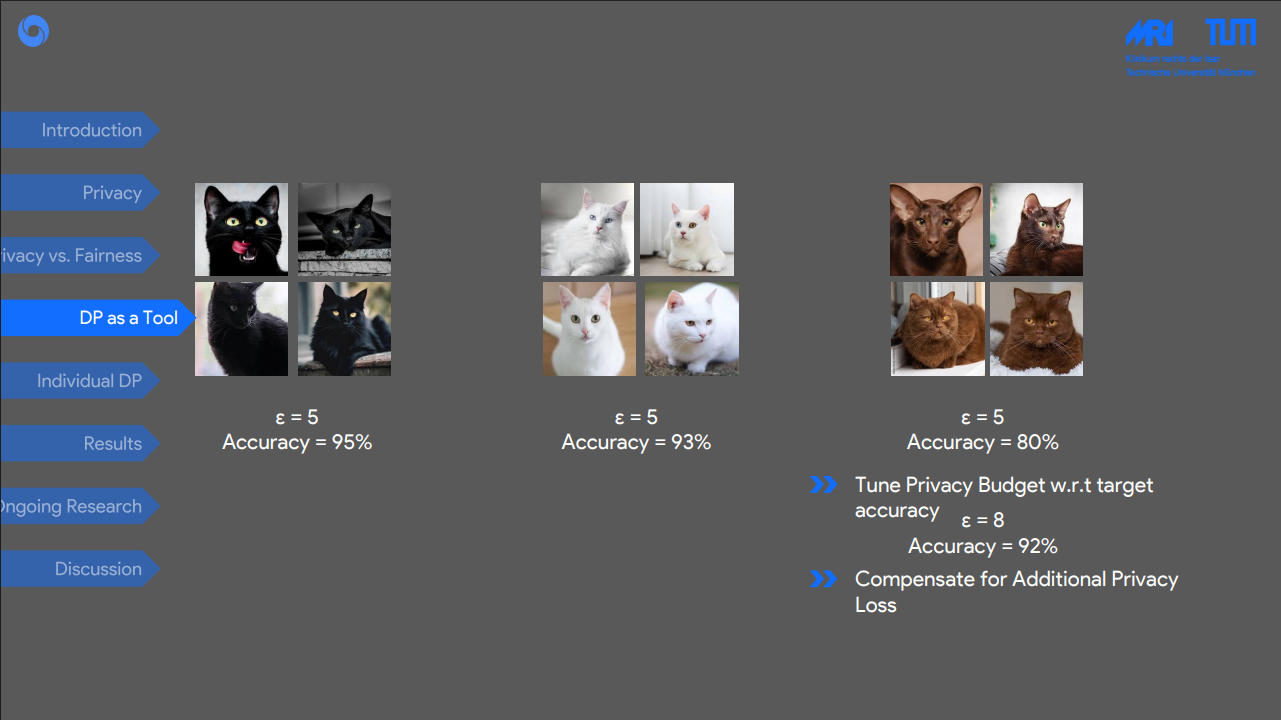

As machine learning systems become increasingly embedded in decision-making processes, concerns about fairness and privacy are intensifying. While previous work has highlighted the inherent trade-offs between these goals, we challenge the assumption that fairness and differential privacy are necessarily at odds. We propose a novel perspective: differential privacy, when strategically applied with group-specific privacy budgets, can serve as a lever to enhance group fairness. Our approach empowers underrepresented or disadvantaged groups to contribute data with higher privacy budgets, improving their model performance without harming others. We empirically demonstrate a “rising tide” effect, where increased privacy budgets for specific groups benefit others through shared information. Furthermore, we show that fairness improvements can be achieved by selectively increasing privacy budgets for only the most informative group members. Our results on the FairFace and CheXpert datasets reveal that sacrificing privacy in a controlled, group-aware manner can reduce bias and enhance fairness, offering a positive-sum alternative to traditional fairness–accuracy or privacy–utility trade-offs.
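
To make the idea of group-specific privacy budgets concrete, here is a minimal sketch of how a larger budget translates into less noise. It is not the method presented in the talk: it uses the classical Gaussian mechanism on a simple clipped mean as a stand-in, and the group names, the budget values in `GROUP_EPSILON`, `DELTA`, and the helpers `gaussian_sigma` and `private_group_mean` are all hypothetical choices made for illustration.

```python
import math
import random

# Hypothetical per-group privacy budgets (illustrative values only):
# the underrepresented group opts into a larger epsilon, i.e. weaker privacy.
GROUP_EPSILON = {"majority": 0.2, "underrepresented": 0.8}
DELTA = 1e-5  # assumed failure probability shared by all groups


def gaussian_sigma(epsilon, delta, sensitivity=1.0):
    """Noise scale of the classical Gaussian mechanism.

    The classical calibration is only valid for 0 < epsilon < 1.
    """
    if not 0 < epsilon < 1:
        raise ValueError("classical Gaussian mechanism requires 0 < epsilon < 1")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


def private_group_mean(values, epsilon, delta, clip=1.0, rng=random):
    """Release a noisy mean of one group's values under (epsilon, delta)-DP."""
    # Clip each value to [-clip, clip] so that replacing one record
    # changes the mean by at most 2 * clip / n.
    clipped = [max(-clip, min(clip, v)) for v in values]
    sensitivity = 2 * clip / len(clipped)
    sigma = gaussian_sigma(epsilon, delta, sensitivity)
    return sum(clipped) / len(clipped) + rng.gauss(0.0, sigma)


if __name__ == "__main__":
    rng = random.Random(0)
    data = {
        "majority": [rng.uniform(-1, 1) for _ in range(1000)],
        "underrepresented": [rng.uniform(-1, 1) for _ in range(100)],
    }
    for group, values in data.items():
        eps = GROUP_EPSILON[group]
        sigma = gaussian_sigma(eps, DELTA, 2.0 / len(values))
        noisy = private_group_mean(values, eps, DELTA, rng=rng)
        print(f"{group}: epsilon={eps}, sigma={sigma:.4f}, noisy mean={noisy:.4f}")
```

Because the noise scale is inversely proportional to epsilon, raising a group's budget from 0.2 to 0.8 cuts the noise added to that group's statistic by a factor of four at the same sensitivity. In DP training the analogous knob would be the noise applied to clipped gradients, but that setting is outside the scope of this sketch.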